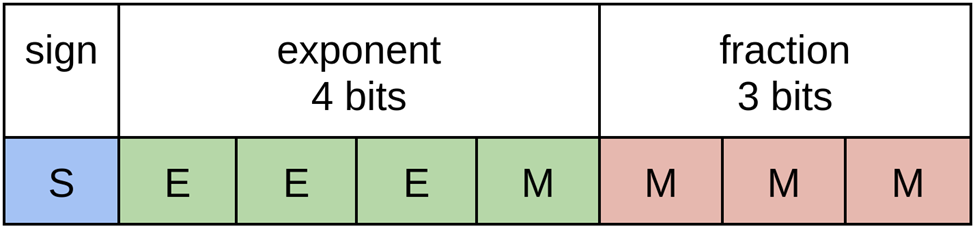

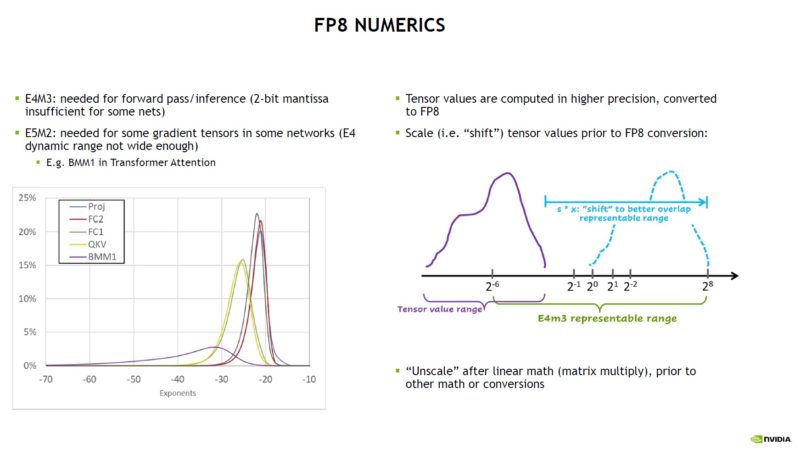

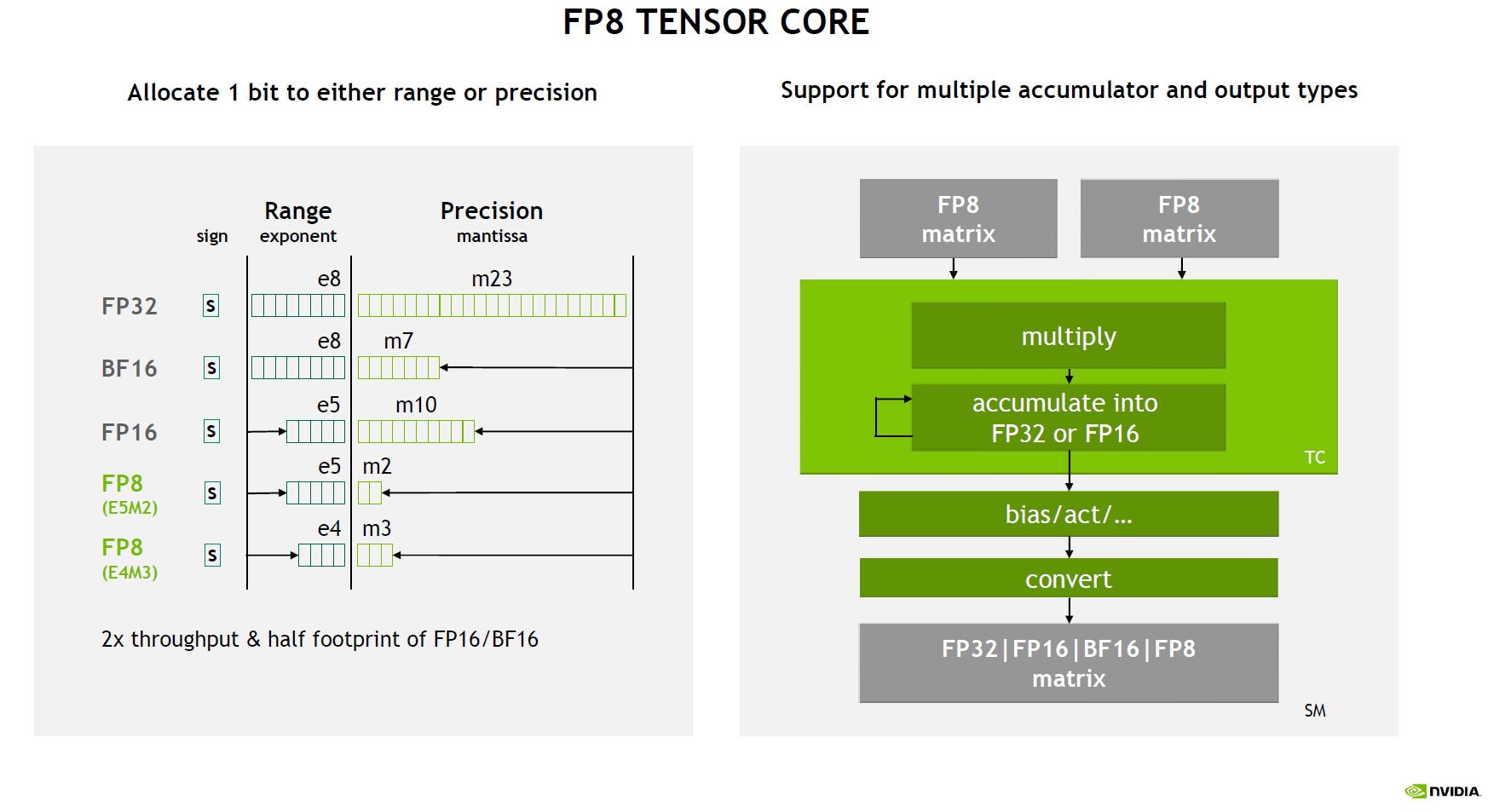

NVIDIA, Arm, and Intel Publish FP8 Specification for Standardization as an Interchange Format for AI | NVIDIA Technical Blog

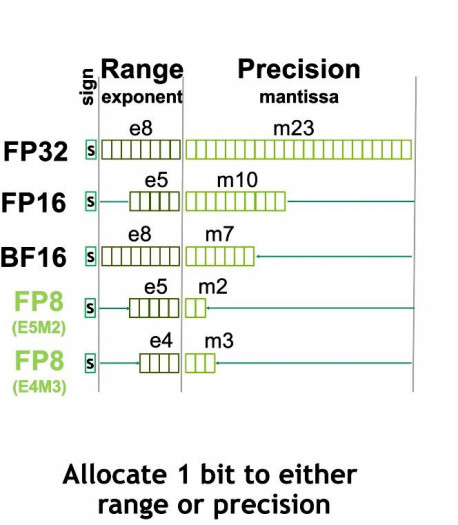

Successes with Bfloat16 - Flexpoint, Bfloat16, TensorFloat32, FP8: New Floating-Point Formats Thanks to AI - Golem.de
