pytorch - Transformers: How to use the target mask properly? - Artificial Intelligence Stack Exchange

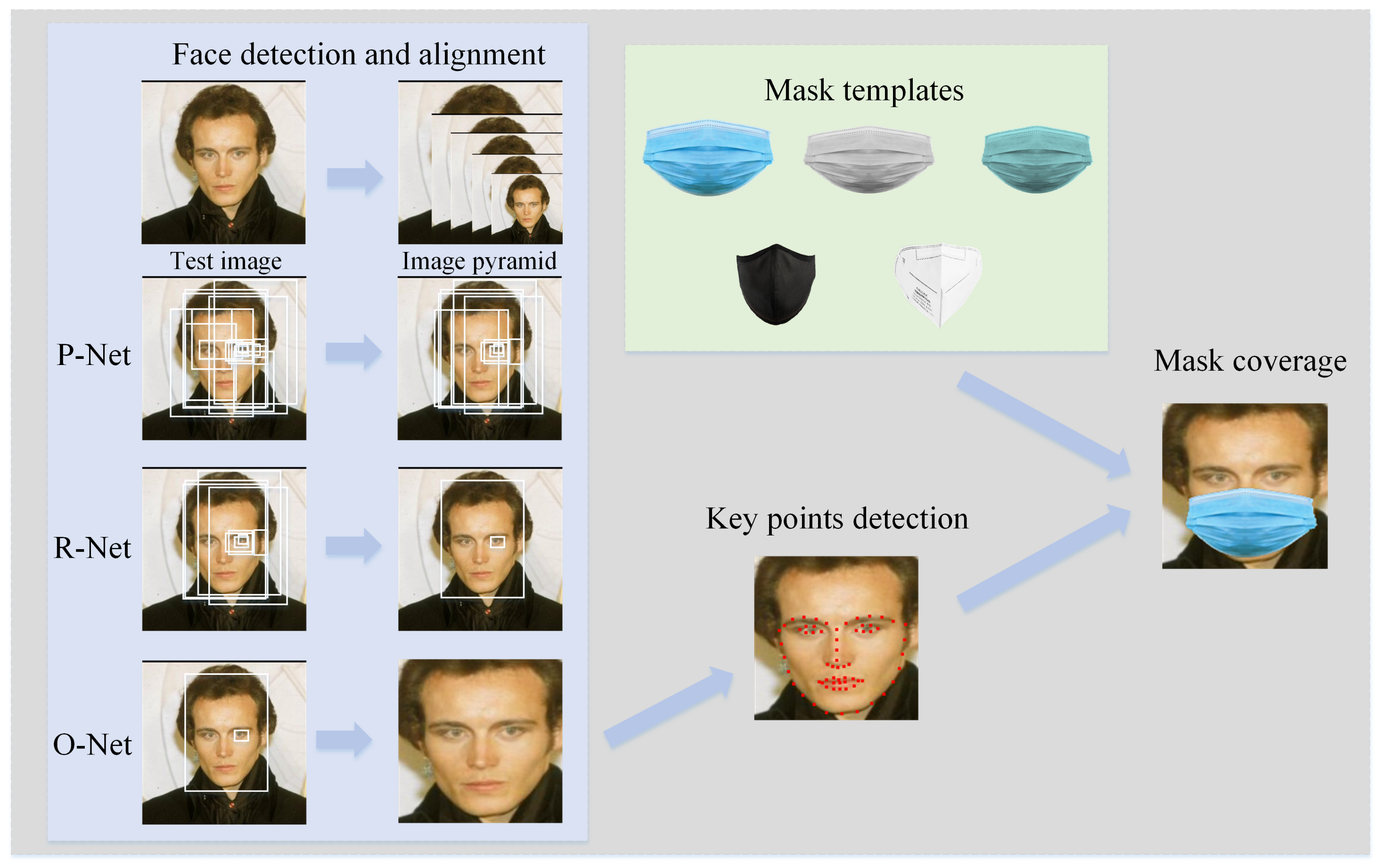

Applied Sciences | Free Full-Text | MFCosface: A Masked-Face Recognition Algorithm Based on Large Margin Cosine Loss

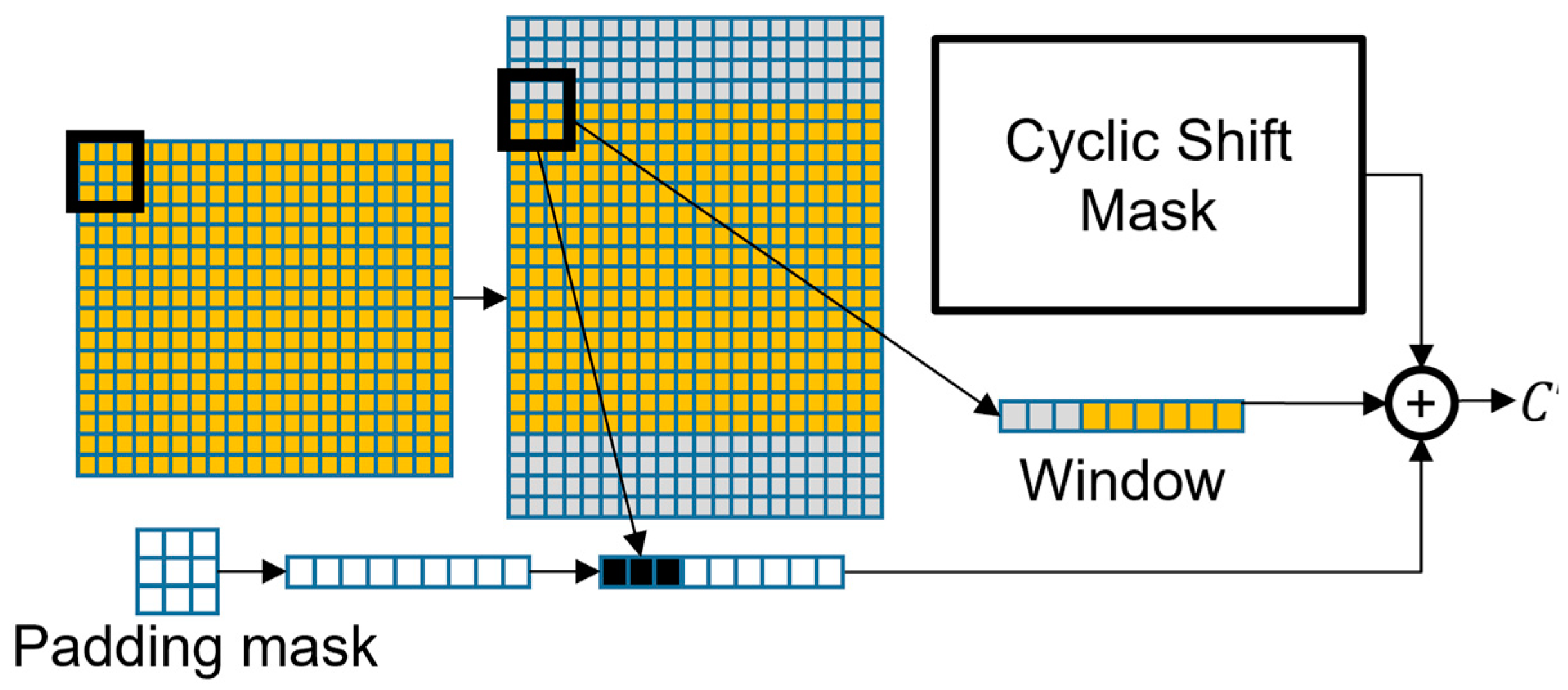

Generation of the Extended Attention Mask, by multiplying a classic... | Download Scientific Diagram

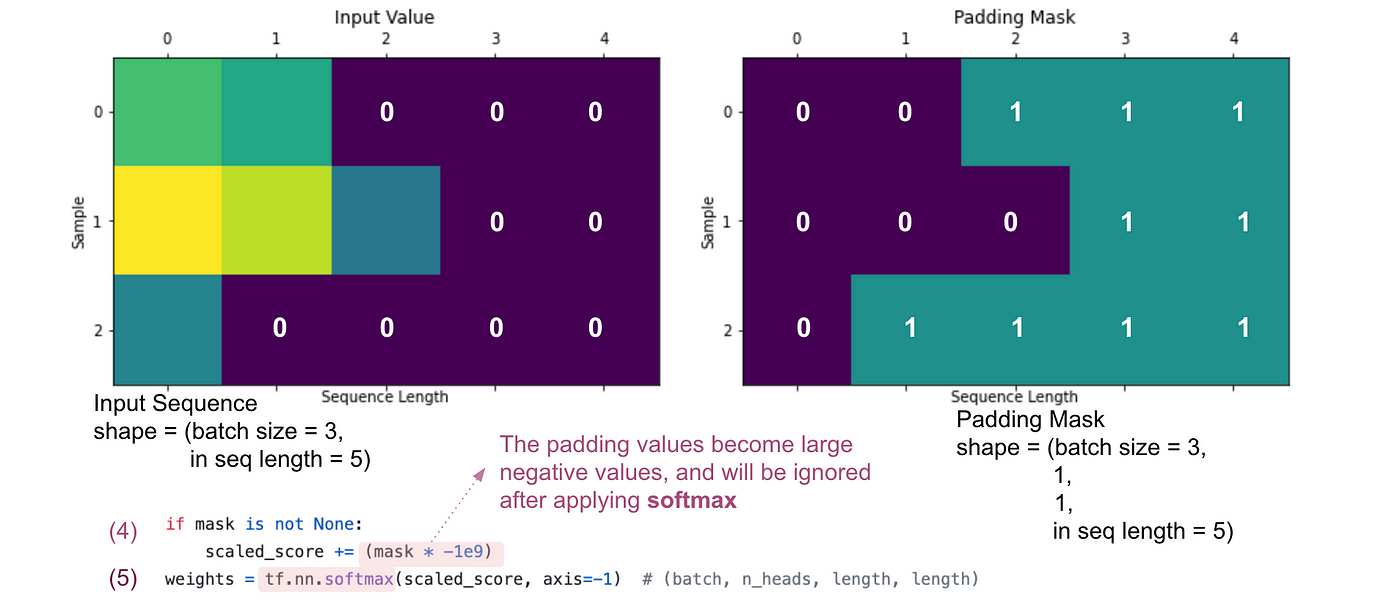

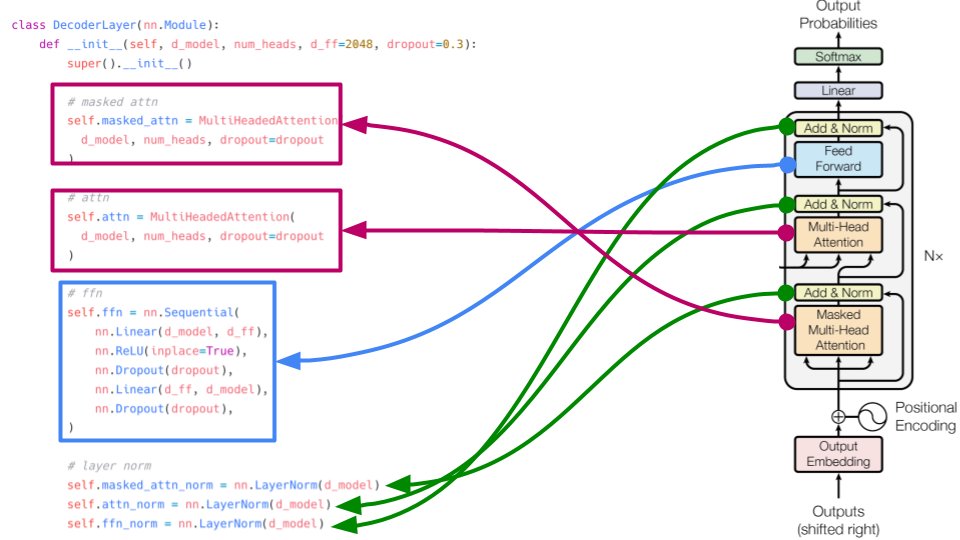

abhishek on X: "The decoder layer consists of two different types of attention. the masked version has an extra mask in addition to padding mask. We will come to that. The normal

Amazon.com : Mueller Sports Medicine Face Guard, Nose Guard for Sports, Adjustable Face Mask with Foam Padding for Men and Women, One Size, Clear : Sports & Outdoors

Remote Sensing | Free Full-Text | Imitation Learning through Image Augmentation Using Enhanced Swin Transformer Model in Remote Sensing

a) Each amino acid is encoded as a 1 to 20 numeric number, inclusive,... | Download Scientific Diagram

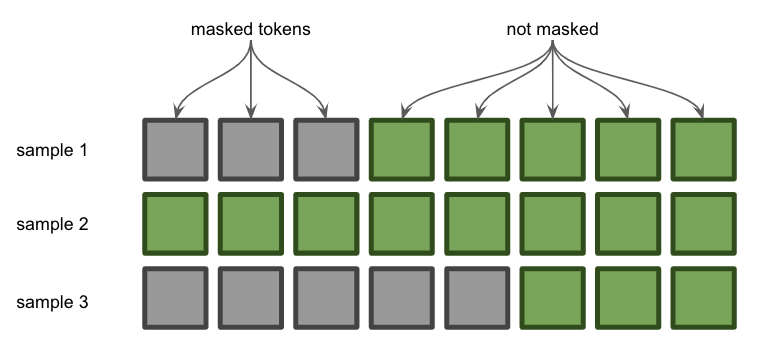

[Feature request] Query padding mask for nn.MultiheadAttention · Issue #34453 · pytorch/pytorch · GitHub

![[pytorch] 🐱👤Transformer on!](https://velog.velcdn.com/images/gtpgg1013/post/cb08698a-bfd5-4bc8-adaa-ad857724c069/image.png)

![N_2.A1.2 [IMPL] Build GPT-2 Model - Deep Learning Bible - 3. Natural Language Processing - Korean](https://wikidocs.net/images/page/161978/Data_eng_n_Build_GPT_2_Gray_Data.png)